Introducing Harness AI Security

If your CISO asked how well you could secure your AI-native applications today, what would your answer be?

Most security teams would not be able to confidently answer that question today. While AI firewalls exist, they only protect the AI applications you already know about (they don’t address the problem of shadow AI), and only protect applications once they’re deployed in production. Today, we're announcing three new capabilities in beta to offer a different and more comprehensive approach to AI security:

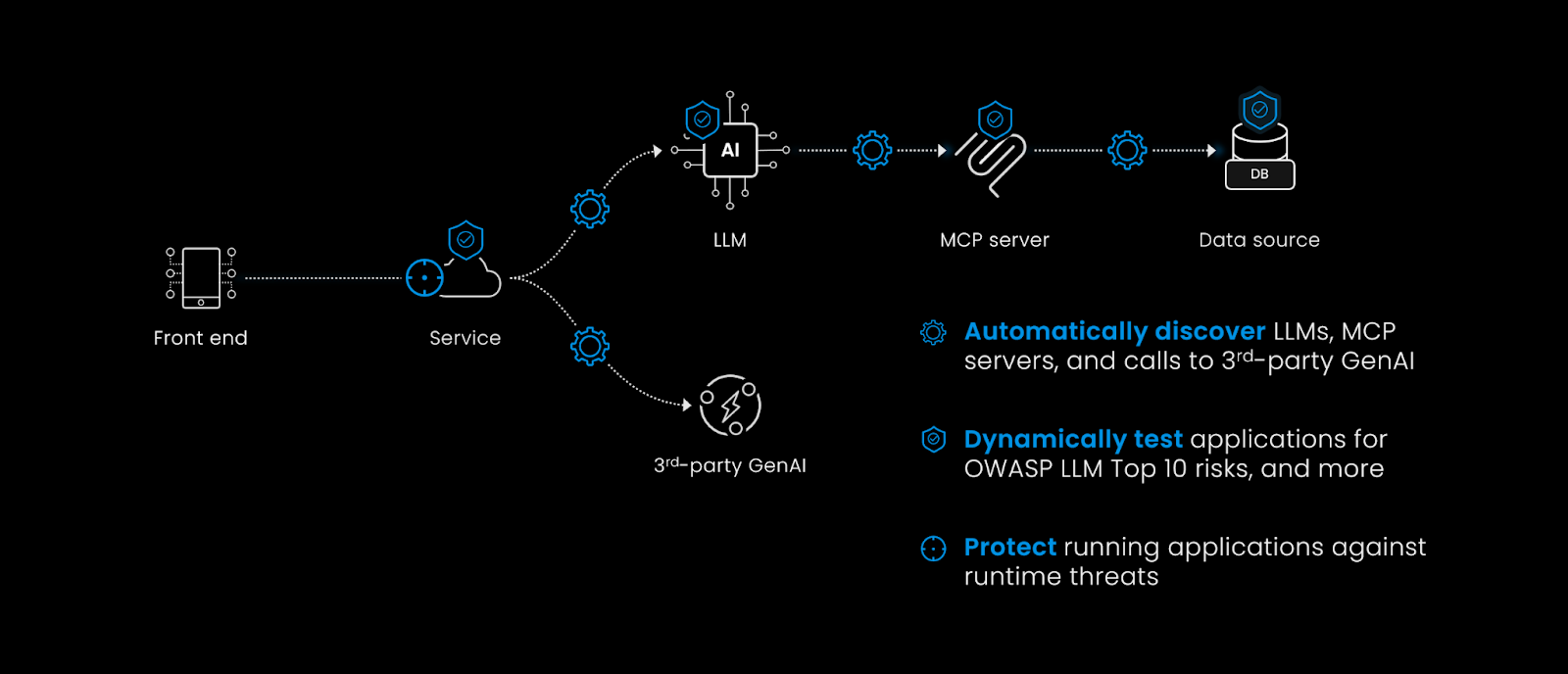

- AI Discovery: discovering all of the AI components and assets comprising your AI-native applications

- AI Testing: shifting left to test AI components earlier in the SDLC, before applications are deployed in production

- AI Protection: protecting AI-native applications deployed in production against evolving threats

A Different Approach: AI Security Requires API Security

API security provides an ideal foundation from which to secure AI-native applications. Most AI components and services communicate via APIs, especially in distributed or production environments. This means that every new AI client, MCP server, and LLM communicates through API endpoints - something your security teams have had time to understand. While AI threats are different, from an attack surface perspective, there is no AI security without API security.

That is why Harness built AI security on our industry-leading API security platform. We understand that protecting AI-native applications requires the same comprehensive approach that's worked for APIs: complete visibility into your attack surface, proactive vulnerability testing, and real-time threat protection.

AI Discovery: Addressing the Problem of Shadow AI

Just as with API security, AI security starts with discovery. You need to know what AI components you have and where they are before you can assess, understand, and mitigate the risk they present to your organization.

Harness already helps you automatically discover and inventory all of the APIs in your environment. We continually monitor and analyze your API traffic to catch new API endpoints as they’re first deployed, as well as updates to existing endpoints as they occur. And because AI components communicate through APIs, it’s a natural extension to help you recognize those newly deployed AI components as well.

How AI Discovery helps

AI discovery performs several essential tasks to help you understand your current AI risk:

- Identify AI components introduced to your application environment, including LLMs, MCP servers, MCP tools, AI model providers, and other AI functionality. This helps you understand what your AI attack surface looks like at any point in time.

- Identify calls to third-party Generative AI (GenAI) services like OpenAI, Anthropic, and Google. This helps you capture unauthorized use of external GenAI services and understand what sensitive data might be leaving your organization.

- Assess the runtime risk of your AI components, such as by identifying lack of encryption or weak authentication on AI APIs, unauthenticated APIs calling LLMs, the presence of sensitive data in prompts, and data privacy violations (e.g., regulated data sent to external models).

- Provide an up-to-date inventory of all AI assets, including both first-party API components and third-party AI services, so you have a complete list of everything in your environment at any time, in real time.

The ability to maintain an up-to-date AI inventory is increasingly becoming a requirement. Just as PCI DSS 4.0 introduced the requirement to maintain an up-to-date inventory of an organization’s API endpoints, the EU AI Act, ISO 42001, and emerging regulations may soon do the same for AI systems. In addition, API Discovery can help create compliance policies and help you manage the flow of sensitive data through your AI applications. Preparing for evolving compliance requirements helps AI-forward organizations manage future risk.

AI Testing: Shifting Left to Test for Risk Earlier in the SDLC

AI applications need fundamentally different testing approaches than traditional applications. Existing DAST tools were built to find traditional web application risks, such as SQL injections and XSS vulnerabilities. They were not designed to find the evolving types of risks in AI-native applications. For example, they can't test for prompt injection, system prompt leakage, or excessive agency in AI agents.

How AI Testing helps

AI Testing is specifically designed to test for and identify AI-specific risks in AI applications:

- Testing for AI-specific risks, such as those in the OWASP Top 10 Risks for LLM Applications. This includes risks such as prompt injection (LLM01), sensitive information disclosure (LLM02), system prompt leakage (LLM07), excessive agency, and more.

- Validating both inputs and outputs - an attacker exploiting prompt injection isn't attacking application code—they're using natural language to manipulate AI behavior, making it ignore instructions, leak information, or take unauthorized actions.

The economics of security say to catch vulnerabilities early. Finding a prompt injection during development costs an afternoon of engineering time. Finding it in production after a data leak costs legal fees, regulatory fines, and brand damage.

That's why AI testing needs to integrate into CI/CD pipelines. Automated testing on every commit gives developers immediate feedback, letting them fix AI-specific vulnerabilities before code ships. And by integrating with continuous AI discovery, you can test every AI component in your environment, including previously unknown shadow AI.

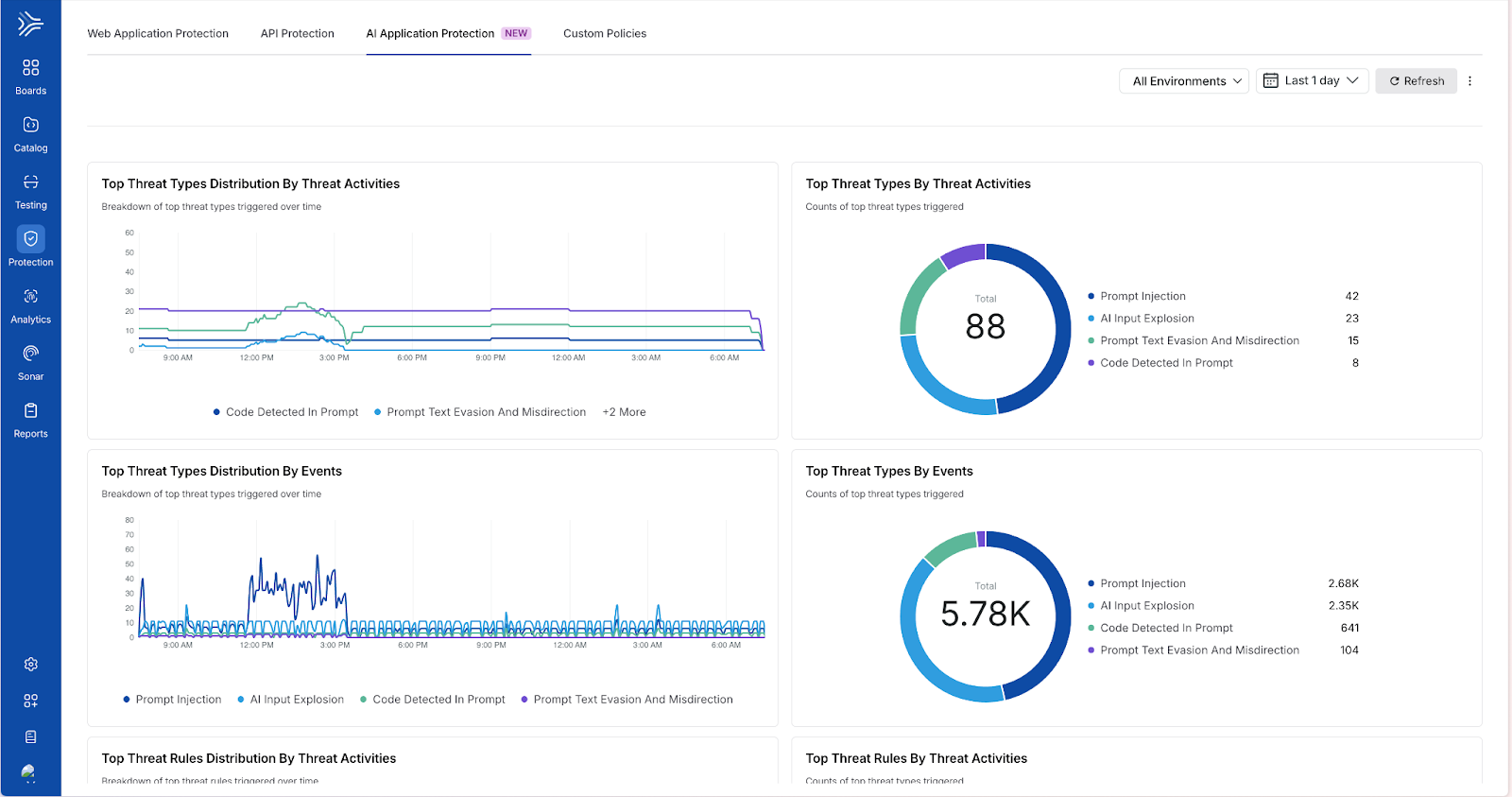

AI Protection: Protecting against Prompt Injections and More

Discovery tells you what you have. Testing tells you where the risks are and helps you shift AI security left and mitigate risks before deploying applications in production. However, production environments will always need an active defense against live attacks.

How AI Protection helps

AI threats are evolving fast, with new exploits being discovered daily. You need protection that adapts to changing threat patterns and understands request context. Because AI interactions flow through APIs, you can apply behavioral analysis techniques that work for API security—establish normal traffic baselines and detect anomalies that might indicate attacks, even novel techniques you've never seen.

A prompt injection attempt might look like a normal user query on the surface. Only by understanding the deep runtime context—how the request interacts with AI components, what data it accesses, what response it generates—can you distinguish legitimate use from malicious intent.

Real-time protection must address the full spectrum of AI-specific risks: detecting and blocking prompt injection before it reaches your LLMs, identifying when AI applications generate improper responses that leak sensitive data, and preventing misuse of excessive agency where AI agents attempt unauthorized actions.

Getting Started with Harness AI Security

Harness’ integrated approach to AI security helps ensure that you’re testing and protecting not just the AI components you know about (which can be very few) but also the ones you don’t. Starting with AI discovery and inventory gives you confidence that you have the full picture of your AI risk across your growing attack surface and evolving threat surface.

If your CISO asked how prepared you are to secure your AI-native applications, your answer would be that you could provide an inventory of every AI component in your production applications today, an assessment of your overall AI risk, and a means to protect your AI components against active threats in production.

All three capabilities—AI discovery, AI testing, and AI protection—are available today in beta and built on the same API security platform you already have. If your developers are building AI-native applications, and you don’t know where they are, contact your account manager today and ask how we can help.

The Inside Trace

Subscribe for expert insights on application security.

.avif)